Ref. No. [UMCES] CBL 2016-012

ACT VS16-03


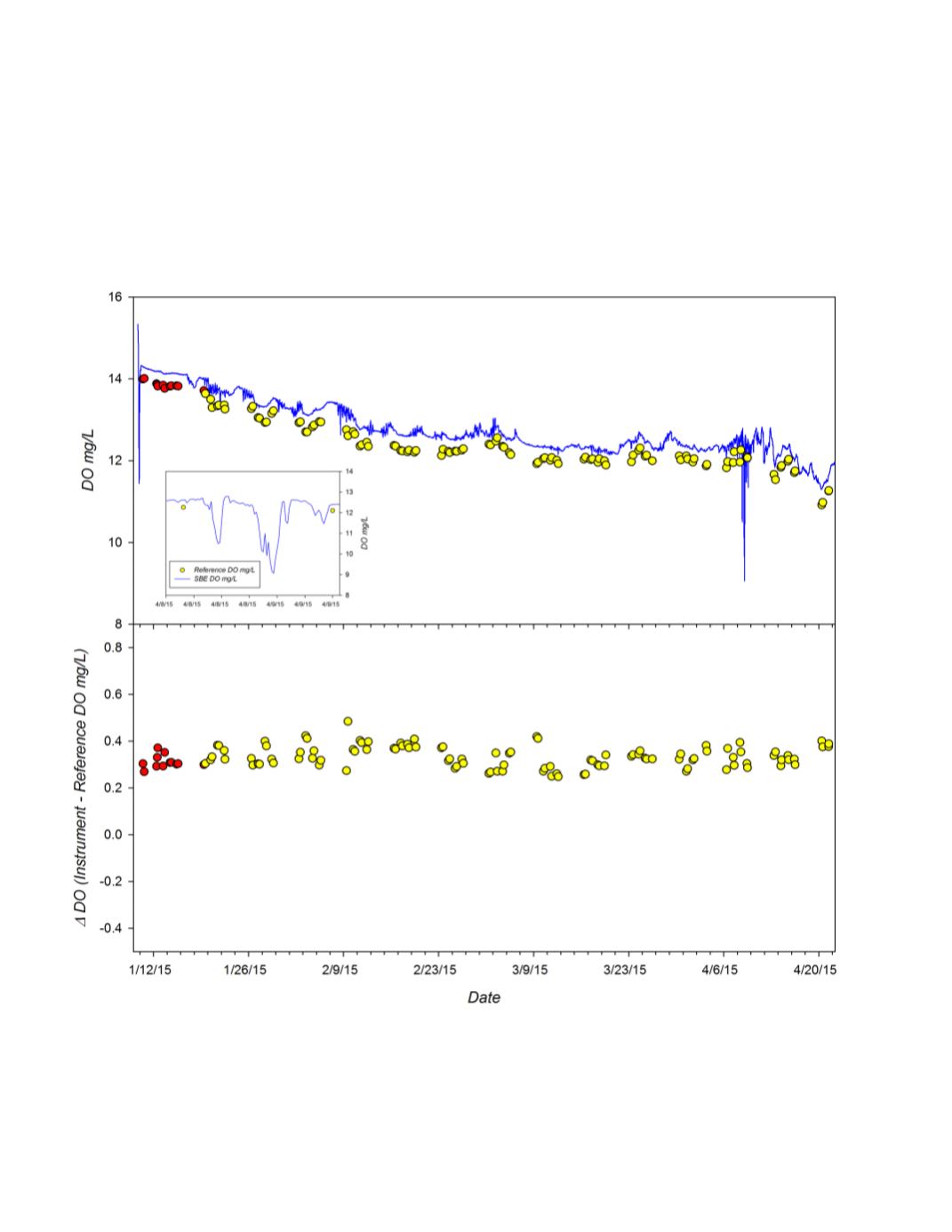

The time series of the difference between instrument and reference DO measurements for each matched pair (n = 118 observations) is given in the bottom panel of Figure 2. The average and standard deviation of the measurement difference over the total deployment were 0.333 ± 0.447 mg/L, with a total range of 0.248 to 0.485 mg/L. There was no measurable trend in instrument offset over the entire deployment (linear regression: r² = 0.003; p = 0.58) that could be attributed to either biofouling or calibration drift.

Figure 2. Time series of DO measured by the SBS HydroCAT deployed during the 15-week Great Lakes field trial.

Top Panel: Continuous DO recordings from the instrument (blue line) and DO of adjacent grab samples determined by Winkler titration (red circles; yellow circles represent adjusted reference values).

Bottom Panel: Difference in measured DO relative to reference samples (Instrument DO – Ref DO, mg/L) observed during the deployment.

Inset: Close-up of the excursion that occurred 4/8–4/9. No reference samples were collected during this time period.